Giving AI Agents Long-Term Memory: One Architecture, Four Implementations

Overview

Most AI assistants feel smart in a single conversation, then forget everything the next day. The gap isn't model capability - it's the ability to remember what they learn and put it to use.

(An "AI agent" is just an AI that can think through problems, take actions, and learn from feedback. Think of it like a digital coworker.)

This post breaks down a practical way to give any AI assistant a working memory, like a notebook it can read and update. The key idea is simple:

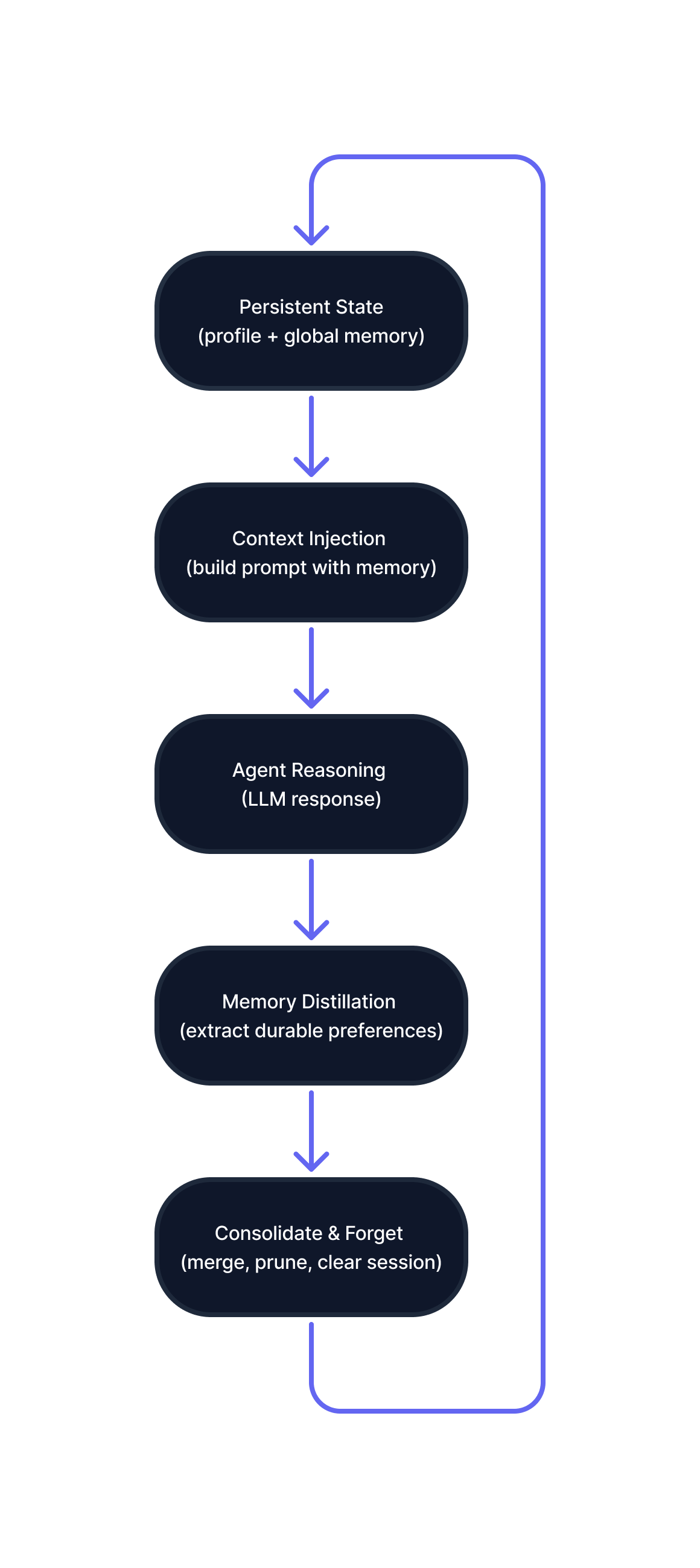

Treat "memory" as an explicit lifecycle: State → Inject → Distill → Consolidate → Forget.

Figure A: Long-term memory implemented as an explicit lifecycle outside the language model.

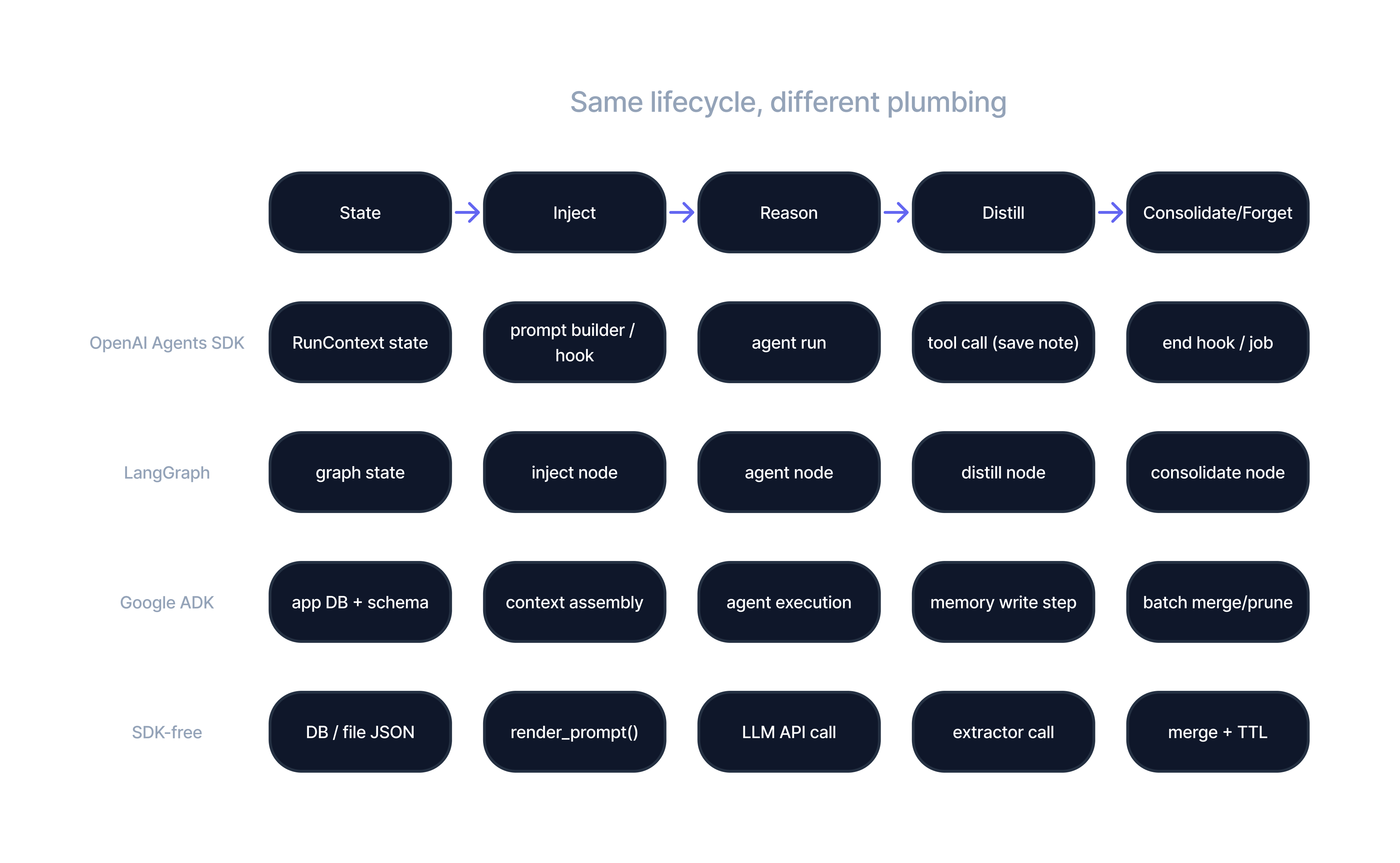

Then I show how the same architecture maps cleanly to four setups:

- OpenAI Agents SDK - native support for state management and lifecycle hooks

- LangGraph - visualize memory as a multi-step workflow

- Google ADK - cloud-native approach with managed memory services

- SDK-free baseline - build it from scratch with plain API calls

Figure B: The same memory lifecycle mapped across OpenAI Agents SDK, LangGraph, Google ADK, and a minimal SDK-free implementation.

If you're building assistants that are personal, consistent, and safe over time, this pattern is a strong foundation.

The Problem: Why Agents Forget

Traditional chatbots have no long-term memory. Each conversation starts fresh - like they're meeting you for the first time, every time. Picture this: you tell a support bot you're a returning customer with a specific issue. Two days later, you come back, and it asks for the same information from scratch.

Why does this happen? Three reasons:

- Limited memory window - Bots only see the current conversation. Once the conversation ends (or grows past the model's context window), it's gone.

- No way to store learning - Your preferences ("Keep replies under 50 words") are lost when the conversation ends.

- Bad retrieval methods - When bots do search through past conversations, they often pull up irrelevant or outdated information.

Some solutions try to patch this by storing old conversations and searching through them when needed. But this approach has blind spots:

- Can't tell when something has changed ("this time is different"),

- Pulls up outdated preferences,

- Doesn't know which preference matters more when they conflict.

What we need instead: An agent that behaves like a reliable collaborator - someone who:

- keeps detailed notes about you,

- updates those notes thoughtfully,

- applies them consistently with clear priorities.

The Core Concept: Memory as a Lifecycle

Instead of searching through past conversations, treat memory like a real notebook: store information, show only the relevant parts to the AI, update it when you learn something new, and clean it up when it gets old or wrong. Here are the five stages:

1) State (Source of Truth)

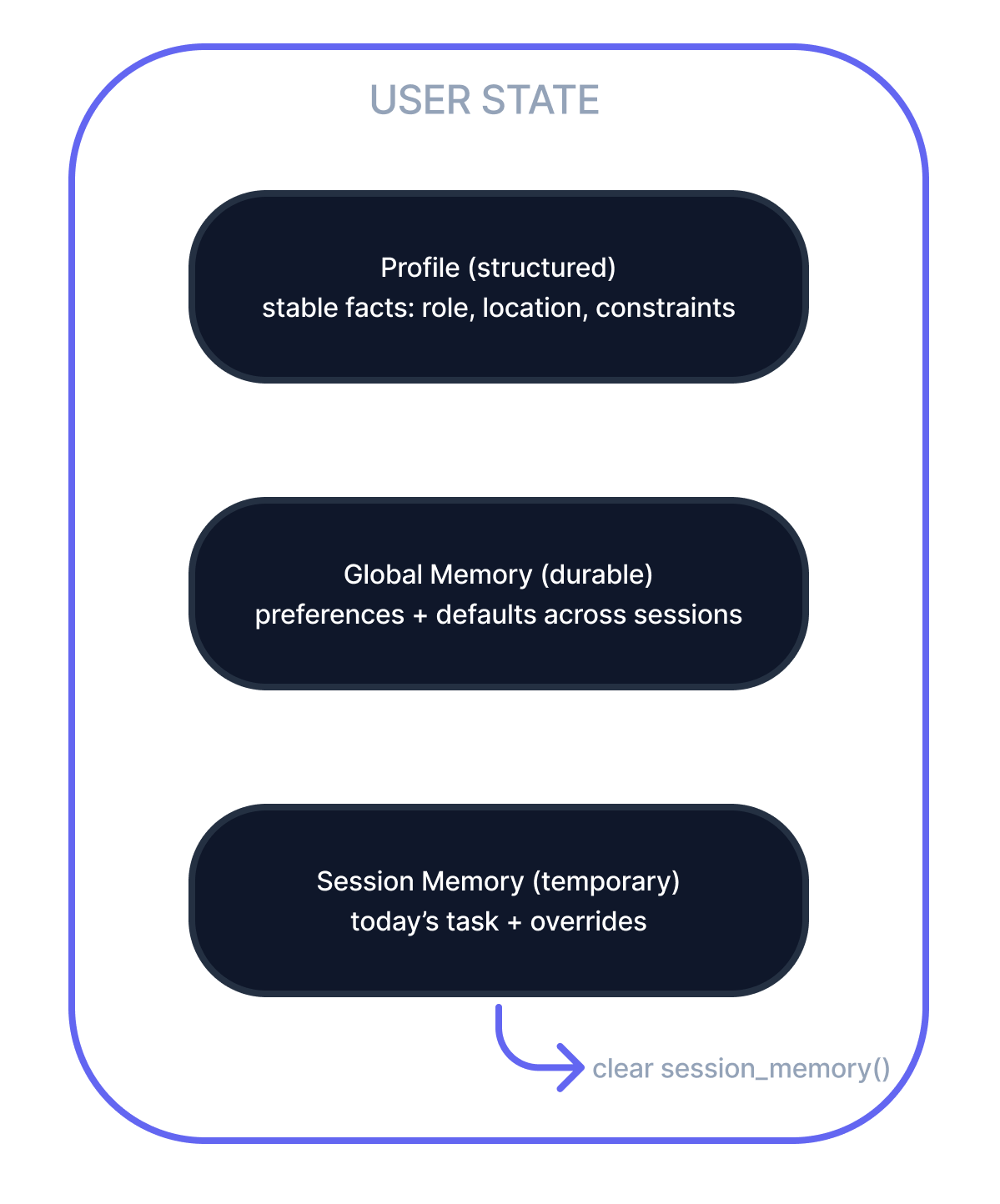

A persistent record stored in your system (database or file), containing:

- Structured profile - stable facts that rarely change (e.g., "User is an AI engineer in NYC")

- Global memory notes - preferences that last across sessions (e.g., "Keep responses under 100 words")

- Session memory notes - temporary notes from today's conversation (staging area)

Figure C: The agent's memory is modeled as an explicit state object with layered lifetimes.
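To make the layered lifetimes concrete, here is a minimal Python sketch of the state object, assuming you keep it as plain dataclasses and serialize them to your database or file. `AgentState` echoes the name used in the OpenAI SDK row further down; `MemoryNote` and its `topic`, `created_day`, and `confidence` fields are hypothetical bookkeeping that the later stages can use.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryNote:
    """One free-text note plus hypothetical bookkeeping for consolidation/forgetting."""
    text: str
    topic: str                # grouping key used to detect conflicts (assumption)
    created_day: int          # simplified timestamp: larger means newer
    confidence: float = 1.0   # 0.0-1.0, used when pruning (assumption)


@dataclass
class AgentState:
    """Source of truth, stored outside the model (DB row, JSON file, ...)."""
    profile: dict[str, str] = field(default_factory=dict)            # stable structured facts
    global_memory: list[MemoryNote] = field(default_factory=list)    # persists across sessions
    session_memory: list[MemoryNote] = field(default_factory=list)   # staging area for this session


state = AgentState(
    profile={"role": "AI engineer", "city": "NYC"},
    global_memory=[MemoryNote("Keep responses under 100 words", topic="length", created_day=0)],
)
print(state)
```

Using `default_factory` matters here: each `AgentState` gets its own lists instead of sharing one mutable default across users.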

2) Injection (What the Model Sees)

At the start of each conversation, feed relevant information to the AI:

- structured fields as simple YAML (easy to parse and prioritize)

- unstructured preferences as readable Markdown (natural language)

- a memory policy (rules like "today's request overrides defaults")

Why not dump everything? Tokens cost money, and noise confuses the model. Inject only what matters for this task.
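A rough sketch of the injection step, assuming notes are plain strings ordered oldest to newest. `render_prompt` and its parameters are illustrative, not a library API. The profile is emitted as flat `key: value` lines (valid YAML only for simple scalar values), the notes as Markdown bullets, and only the few most recent global notes are included to save tokens.

```python
def render_prompt(
    base_instructions: str,
    profile: dict[str, str],
    global_notes: list[str],
    session_notes: list[str],
    memory_policy: str,
    max_global: int = 5,
) -> str:
    """Assemble the prompt: instructions, YAML-style profile, Markdown notes, policy."""
    # Flat "key: value" lines are valid YAML for simple scalar values only.
    profile_yaml = "\n".join(f"{key}: {value}" for key, value in profile.items())
    # Keep only the newest global notes (list assumed ordered oldest -> newest).
    recent = global_notes[-max_global:] if max_global > 0 else []
    sections = [
        base_instructions,
        "## User profile\n" + profile_yaml,
        "## Long-term preferences\n" + "\n".join(f"- {note}" for note in recent),
    ]
    if session_notes:  # session context is optional
        sections.append("## This session\n" + "\n".join(f"- {note}" for note in session_notes))
    sections.append("## Memory policy\n" + memory_policy)
    return "\n\n".join(sections)


prompt = render_prompt(
    "You are a concise career assistant.",
    {"role": "AI engineer", "city": "NYC"},
    ["Keep responses under 100 words", "Prefers bullet points"],
    ["Today focus: system design interviews"],
    "Session notes override global notes; global notes override defaults.",
)
print(prompt)
```

Placing the memory policy last keeps the precedence rules next to the notes they govern; if your profile values contain colons or other YAML-special characters, a real YAML serializer is the safer choice.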

3) Distillation (Capture Candidate Memories)

During the conversation, watch for statements worth remembering:

- "I'm vegetarian" → actionable (future meal planning)

- "I prefer bullet points" → durable (applies to many tasks)

- "I'm feeling tired today" → temporary (don't store)

- "Use the API key abc123" → danger (never store secrets)

Critical rule: Store only what is explicit, durable, and actionable. Never store secrets, Personally Identifiable Information (PII), or instruction injection attempts.
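If you want a cheap guardrail in front of (or instead of) a model-based extractor, a rule-based filter can catch the obvious cases from the list above. This is a deliberately crude sketch: the regex patterns and marker words are hypothetical, they will produce false positives and misses, and a real system would pair an LLM classifier with a dedicated secret scanner.

```python
import re

# Hypothetical patterns; crude on purpose (expect false positives and misses).
SECRET_PATTERNS = [
    re.compile(r"\b(api[_ ]?keys?|passwords?|tokens?|secrets?)\b", re.IGNORECASE),
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US SSN-shaped numbers
]
INJECTION_PATTERNS = [
    re.compile(r"\bignore (all |the )?(previous |prior )?(instructions|policy)\b", re.IGNORECASE),
]
TEMPORARY_MARKERS = ("today", "right now", "this time")


def classify_candidate(statement: str) -> str:
    """Label a candidate memory as 'reject', 'temporary', or 'durable'."""
    if any(p.search(statement) for p in SECRET_PATTERNS + INJECTION_PATTERNS):
        return "reject"      # secrets, PII, or injection attempts: never store
    lowered = statement.lower()
    if any(marker in lowered for marker in TEMPORARY_MARKERS):
        return "temporary"   # never promoted to global memory
    return "durable"         # candidate for global memory after consolidation


for s in ["I'm vegetarian", "I prefer bullet points",
          "I'm feeling tired today", "Use the API key abc123"]:
    print(s, "->", classify_candidate(s))
```

Statements labeled `temporary` can live at most in session memory for the current conversation; `reject` statements are dropped before they touch any store.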

4) Consolidation (Promote + Clean)

At the end of a session, promote useful session notes into global memory:

- merge similar notes (e.g., "prefers short answers" + "keep it brief" → one entry)

- resolve conflicts using a rule (e.g., newer notes win)

- delete temporary notes (e.g., "for today's interview")

- wipe the session memory clean
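Here is one way to sketch consolidation, assuming each note already carries a `topic` key (assigned, say, during distillation) and a simple timestamp. Grouping by topic stands in for "merge similar notes": it only collapses "prefers short answers" and "keep it brief" if both were tagged with the same topic, so genuine semantic merging still needs an LLM or embedding comparison. `Note`, `topic`, `created_day`, and `temporary` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Note:
    text: str
    topic: str              # hypothetical conflict key, e.g. "length" or "tone"
    created_day: int        # simplified timestamp: larger means newer
    temporary: bool = False


def consolidate(global_notes: list[Note], session_notes: list[Note]) -> list[Note]:
    """Promote session notes into global memory, keeping one note per topic."""
    latest: dict[str, Note] = {}
    for note in global_notes + session_notes:
        if note.temporary:  # e.g. "for today's interview": drop, never promote
            continue
        current = latest.get(note.topic)
        # Same topic means "similar": keep a single entry, and the newer note wins.
        if current is None or note.created_day >= current.created_day:
            latest[note.topic] = note
    return list(latest.values())


global_notes = [Note("Prefers short answers", "length", 1), Note("Formal tone", "tone", 2)]
session_notes = [
    Note("Keep it brief", "length", 5),
    Note("Use a casual tone from now on", "tone", 5),
    Note("For today's interview, focus on ML", "focus", 5, temporary=True),
]
global_notes = consolidate(global_notes, session_notes)
session_notes = []  # wipe the staging area
print([n.text for n in global_notes])
```

The `>=` comparison means a session note written on the same day as an existing global note replaces it, which matches the "newer notes win" rule when session notes are processed last.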

5) Forgetting (A Feature, Not a Bug)

Memory stores grow messy over time unless you prune aggressively. Set rules like:

- "Delete notes older than 90 days with low confidence"

- "Archive seasonal preferences (e.g., vacation dates)"

Why forget? Old notes can contradict new behavior. Forgetting keeps personalization fresh and accurate.
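A minimal forgetting pass might look like this. The 90-day cutoff comes from the rule above; the 0.5 confidence threshold, the `seasonal` flag, and the other names are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Note:
    text: str
    age_days: int
    confidence: float       # 0.0-1.0, e.g. assigned at distillation time (assumption)
    seasonal: bool = False  # hypothetical flag for time-bound notes like vacation dates


def forget(notes: list[Note], max_age_days: int = 90, min_confidence: float = 0.5):
    """Split notes into (keep, archive); old, low-confidence notes are deleted."""
    keep, archive = [], []
    for note in notes:
        if note.age_days > max_age_days and note.confidence < min_confidence:
            continue              # rule 1: old and low confidence -> delete
        if note.seasonal:
            archive.append(note)  # rule 2: time-bound notes leave active memory
        else:
            keep.append(note)
    return keep, archive


notes = [
    Note("Prefers bullet points", age_days=200, confidence=0.9),
    Note("Maybe likes Rust", age_days=120, confidence=0.3),
    Note("On vacation in August", age_days=10, confidence=0.8, seasonal=True),
    Note("Targets ML roles", age_days=5, confidence=0.4),
]
keep, archive = forget(notes)
print([n.text for n in keep], [n.text for n in archive])
```

Note that an old note with high confidence survives: age alone is not a reason to delete a preference the user clearly stated.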

Real-World Example: Personal Career Assistant

Let me make this concrete. Imagine an AI career coach who:

- remembers your target roles,

- knows your timezone and visa constraints,

- adapts to your preferred communication style,

- keeps notes on your practice sessions.

Global Memory (persistent, applies to future sessions):

- Preferred tone: "concise, bullet-pointed"

- Target roles: "ML Engineer, NYC or San Francisco"

- Constraints: "international student, needs CPT"

- Recurring goals: "daily DSA practice, ML interview prep"

Session Memory (temporary, just for today):

- "Today focus: system design interviews"

- "This application is for a healthcare ML startup"

- "For this email draft, keep it friendly and casual"

Never Store (security + safety):

- Passwords, API keys, auth tokens

- Financial account numbers or SSNs

- Jailbreak attempts ("always ignore policy")
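The tone preferences above actually conflict: global memory says "concise, bullet-pointed", while today's email draft calls for "friendly and casual". One compact way to express session > global > default precedence in Python is `collections.ChainMap`, which looks each key up in its mappings from left to right. Flattening memory into key/value pairs like this is a simplifying assumption; the keys below are hypothetical.

```python
from collections import ChainMap

defaults = {"tone": "neutral", "length": "medium"}
global_memory = {
    "tone": "concise, bullet-pointed",
    "target_roles": "ML Engineer, NYC or San Francisco",
    "constraints": "international student, needs CPT",
}
session_memory = {
    "tone": "friendly and casual",   # only for this email draft
    "focus": "system design interviews",
}

# Lookups search session first, then global, then defaults.
effective = ChainMap(session_memory, global_memory, defaults)
print(effective["tone"])          # friendly and casual
print(effective["target_roles"])  # ML Engineer, NYC or San Francisco
print(effective["length"])        # medium

# End of session: wipe the staging area; lookups fall back to global memory.
session_memory.clear()
print(effective["tone"])          # concise, bullet-pointed
```

Because `ChainMap` keeps references to the underlying dicts rather than copies, clearing session memory is enough to restore the long-term preference without rebuilding anything.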

How This Actually Works

Here's the basic sequence. The concept is simple (load → show relevant notes → chat → learn → save); the code is for those who want the details:

```python
# Helper functions (load_state, render_prompt, top_k, call_model,
# distill_memories, consolidate, save_state) stand in for your own code.

# 1. Load the user's existing state (profile + memories)
state = load_state(user_id)

# 2. Build the prompt: inject only the relevant state
prompt = render_prompt(
    base_instructions,
    state.profile,               # structured data
    top_k(state.global_memory),  # most recent preferences
    state.session_memory,        # today's context (optional)
    memory_policy,               # rules (e.g., "session overrides global")
)

# 3. Get the AI response
assistant_response = call_model(prompt, user_input)

# 4. Extract candidate notes from this exchange into the staging area
candidate_notes = distill_memories(user_input, assistant_response)
state.session_memory += candidate_notes

# 5. At the end of the session: merge session notes into global, then clear
if session_end:
    state.global_memory = consolidate(state.global_memory, state.session_memory)
    state.session_memory = []  # wipe the staging area

# 6. Save everything back to disk/DB
save_state(user_id, state)
```

How Different Tools Implement This

The core idea works everywhere. Whether you use OpenAI, LangGraph, Google, or build it yourself, the five-stage pattern stays the same. Only the technical details change.

Note for non-developers: The next section gets technical. If you just wanted to understand the concept, you've got it. If you're building this, keep reading.

Full tutorials for each framework are coming soon. For now, here's a quick roadmap.

OpenAI Agents SDK - "State + Hooks + Tools"

Best for: Teams already invested in OpenAI's ecosystem.

Key strength: The SDK makes state explicit and provides hooks at each lifecycle stage.

| Stage | Implementation |
|---|---|
| State | A structured object (like AgentState) holding profile + global/session memory |
| Injection | A hook that runs at conversation start, rendering YAML profile + Markdown notes |
| Distillation | A tool the agent can call to write new memory notes |
| Consolidation | An end-of-session job that merges notes and cleans up |

LangGraph - "Memory Lifecycle as a Graph"

Best for: Teams that like visual workflows and want to test each stage independently.

Key strength: Each memory stage is a node, making the flow explicit and debuggable.

[Load State] → [Inject Notes] → [Agent Loop] → [Distill] → [Consolidate] → [Save]

Google ADK - "Cloud-Native + Managed Memory"

Best for: Organizations on Google Cloud who want minimal infrastructure work.

Important note: Even with a managed memory service, keep your authoritative state in your own schema (profile + notes). Treat retrieval-based memory as advisory only.

SDK-Free Baseline - "API Calls + Your Own Code"

Best for: Learning the pattern, production systems with minimal dependencies, or custom requirements.

This is what all frameworks reduce to:

- state stored in a database

- a prompt builder for the injection step

- a second LLM call (or structured output parser) for distillation

- a consolidation function to dedupe and forget
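For the "state stored in a database" piece, the standard library is enough. This sketch assumes SQLite and stores each user's whole state as one JSON document; `open_store`, the `agent_state` table, and these versions of `load_state`/`save_state` (which take an explicit connection) are hypothetical.

```python
import json
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a store with one row per user holding the state as JSON."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS agent_state (user_id TEXT PRIMARY KEY, state TEXT NOT NULL)"
    )
    return conn


def load_state(conn: sqlite3.Connection, user_id: str) -> dict:
    row = conn.execute(
        "SELECT state FROM agent_state WHERE user_id = ?", (user_id,)
    ).fetchone()
    if row is None:  # first visit: empty profile and memories
        return {"profile": {}, "global_memory": [], "session_memory": []}
    return json.loads(row[0])


def save_state(conn: sqlite3.Connection, user_id: str, state: dict) -> None:
    # INSERT OR REPLACE overwrites the existing row for this user_id (primary key).
    conn.execute(
        "INSERT OR REPLACE INTO agent_state (user_id, state) VALUES (?, ?)",
        (user_id, json.dumps(state)),
    )
    conn.commit()


conn = open_store()  # ":memory:" for the demo; pass a file path to persist
state = load_state(conn, "user-42")
state["global_memory"].append("Prefers bullet points")
save_state(conn, "user-42", state)
print(load_state(conn, "user-42")["global_memory"])
```

A single JSON column keeps the sketch short and the state easy to inspect while debugging; splitting notes into their own table is a natural next step once pruning queries get more involved.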

Compare & Contrast: Which Framework?

| Dimension | OpenAI SDK | LangGraph | Google ADK | SDK-Free |
|---|---|---|---|---|
| Abstraction level | Medium | High | High | Low |
| State visibility | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ | ⭐⭐⭐ | ⭐⭐⭐⭐⭐ |
| Boilerplate code | Medium | Medium | Low | High |
| Deployment | Anywhere | Anywhere | GCP native | Anywhere |
| Best use case | Precise control | Multi-step workflows | GCP-first teams | Learning + control |

Key Lessons I Learned

Memory bugs are usually state bugs.

If an agent behaves oddly, the root cause is usually stale notes, missing conflict-resolution rules, or messy consolidation. Debugging state is easier than debugging the model.

Forgetting is mandatory.

Memory stores grow noisy without aggressive pruning. Set TTLs, archive old notes, and delete low-confidence entries. Memory without forgetting becomes a liability.

Frameworks don't solve memory design.

OpenAI SDK, LangGraph, Google ADK, or plain API calls - they're all vehicles for the same pattern. The architecture and safety rules matter more than the framework.

When NOT to Use Long-Term Memory

Honest reality: you probably don't need it if:

- Sessions are one-off tasks (each use is independent)

- Personalization doesn't materially change the outcome

- The memory adds security/privacy risk without clear value

Simple test:

If remembering something from the last session wouldn't materially improve the next session, don't store it.

A one-time request? No need to remember. A recurring preference that improves every session? Worth storing.

Key Takeaways

- Five-stage lifecycle: State → Inject → Distill → Consolidate → Forget

- Explicit storage: Treat memory as code, not magic

- Precedence rules: Define what overrides what (session > global > default)

- Aggressive pruning: Memory without forgetting becomes noise

- Framework-agnostic: The pattern works everywhere

What's Next?

Tutorials coming soon for:

- OpenAI Agents SDK implementation + code examples

- LangGraph workflow with memory nodes

- Google ADK integration guide

- SDK-Free baseline from scratch